The Entropy


Summary: We discuss the concepts of information, entropy, and observer's capacity in the discrete measurement space. We derive the propagation of information in discrete j-space. The q-values are introduced.

Notice the word "use". The entropy is observer dependent. The entropy tells us about the resources which cannot be used for the assigned task due to inherent limitations of the system or the observer. The system for the macroscopic observer ObsM (humans, v²/c² ≪ 1) exists based on measurements made by Obsc (v²/c² ~ 1). Thus, while in thermodynamic systems we may not notice the observer dependence, the value of the entropy for a macroscopic observer depends on the observer with maximum capacity to make precise measurements. In the entropy relationship, equation (i), Ω is the number of measurements and kij is a constant characterizing the discrete measurement space, or j-space. In thermodynamics kij is kB, known as the Boltzmann constant. We will determine the significance of kij in measurement space in a later section.

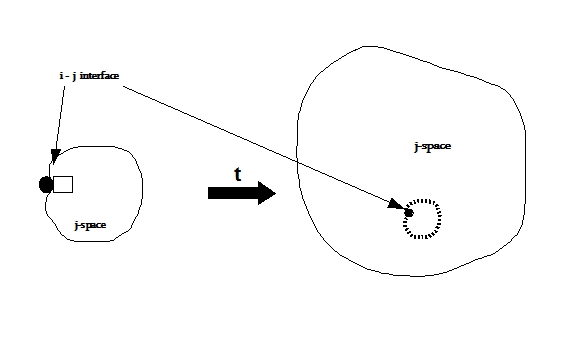
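As a rough numerical illustration of the entropy relationship, the sketch below assumes that equation (i) has the Boltzmann form S = kij ln Ω, which is inferred from the text's mention of a logarithmic function and of kB. The function name `entropy` and the choice of kB as the default for kij are assumptions made only for this example.

```python
import math

# Boltzmann constant in J/K (exact SI value). Using k_B as the default for
# k_ij is an assumption borrowed from the thermodynamic case; the text
# leaves the significance of k_ij in measurement space open.
K_B = 1.380649e-23


def entropy(omega: int, k_ij: float = K_B) -> float:
    """Return S = k_ij * ln(omega), assuming the Boltzmann form of equation (i)."""
    if omega < 1:
        raise ValueError("omega must be a positive number of measurements")
    return k_ij * math.log(omega)


if __name__ == "__main__":
    # A single measurement (omega = 1) gives zero entropy; more measurements
    # needed for the same event give a larger entropy.
    for omega in (1, 10, 1000):
        print(omega, entropy(omega))
```

Under this assumed form, an observer who needs only one state to complete the measurement has zero entropy, and the entropy grows with the number of measurements required.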

Remember the discussion towards the end of the Q-box section! We introduced δi in j-space. Owing to δi, Obsi measures all the information required for a PE1j event in j-space in one state. Hence the entropy in this case will be zero. At the same time, δi really extends the capacity of Obsj, and as a consequence the entropy for the same measurement is quite large for Obsj. This is an important distinction we need to keep in mind.

Suppose an observer Obsj measures the same event twice, first by performing ΩA measurements and then, with improved efficiency, ΩB measurements, such that ΩA > ΩB. The entropies for the two cases are SA and SB, with SA > SB. If we subtract the information IAB from the low-entropy state SB, we get the high-entropy state SA. The more the entropy, the less the information available to an observer. Hence the information is also known as negentropy. The amount of information IAB needed for the efficiency improvement is,

Progress of Information in j-space

We now consider the progress of information from i-space into the discrete j-space as the information measured by the Q-box. We note that the source, i-space, and the measurement space defining the observer environment, j-space, are separated. For the same source, the environments of different observers, and their measurements, will vary with the capacity of the observers. It is therefore prudent to separate the concept of energy, which is measured in j-space using the Q-box and used to define thermodynamic properties, from the information received from i-space, the source. In discrete j-space the evolution from the <t = 0j> state on the time scale, due to increasing entropy, will degrade the information from its original value I(t = 0j) to I(t = 0j+) such that,

The instant (t = 0j) represents the instant the measurements in j-space start, and (t = 0j+) represents the next instant, an infinitesimal time interval later. The exponential function is selected because its derivative is equal to itself and its initial value at q = 0 is 1. The variable q takes only positive integral values in discrete measurement space. Each q-value represents a PE1j event in discrete j-space. We can write equation (iv) as,

We need to remove the proportionality sign. To do that, we determine the initial condition based on Obsi measurements. For Obsi, the observer in i-space, all the outcomes are known, and hence at the instant <t = 0j>,

We have obtained the initial condition based on Obsi capabilities. The initial conditions could not be determined precisely with Obsj, the observer pair in discrete j-space, due to their inherent limitation in determining the origin with absolute accuracy. The variation of information in discrete j-space can be obtained from equation (v) as,

We can rewrite equation (vii) as,

Equation (viii) defines the relationship between the information at a later instant and the initial-state information, in terms of PE1 measurements in a discrete measurement space, or j-space. The relationship is independent of kij, the constant characterizing the j-space. Please note that in deriving equation (viii) we have not considered any physical characteristics of the medium or the observer in j-space except for the observer's capacity to make measurements. The minimum value of q in discrete measurement space is 1.

Finally, we have to consider what happens when the entropy for an observer is maximized, i.e. the point at which the observer cannot make any more measurements. This part is straightforward. With increasing entropy the observer Obsj is highly unlikely to complete a PE1j measurement. The value of the information measured in j-space becomes 0j for the observers in j-space at t = ∞j-, the instant at which the entropy is maximized. The notation <∞j-> signifies an instant before the value on the time axis equals ∞j, or completion. No further information can be measured, hence I(t = 0j+) is null. The condition for this case can be written from equation (viii) as,

The 0j on the R.H.S. of the above equation is measured as finite by Obsi, which means that the information I(t = ∞j-) is finite, but Obsj cannot measure it because it is below the measurement capacity of Obsj.
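As a numerical aside on the earlier comparison of ΩA and ΩB measurements, the sketch below takes the sentence "subtract IAB from SB to get SA" literally, so that IAB = SB - SA, and assumes the logarithmic form S = kij ln Ω for both entropies. Both the sign convention and the logarithmic form are assumptions of this example, and the function name `information_difference` is hypothetical.

```python
import math


def information_difference(omega_a: int, omega_b: int, k_ij: float = 1.0) -> float:
    """Return I_AB = S_B - S_A = k_ij * ln(omega_b / omega_a).

    Assumes S = k_ij * ln(omega) for each case and the literal reading
    S_A = S_B - I_AB; neither is confirmed by the source equations.
    """
    if omega_a < 1 or omega_b < 1:
        raise ValueError("measurement counts must be positive")
    return k_ij * (math.log(omega_b) - math.log(omega_a))


if __name__ == "__main__":
    # Improved efficiency: fewer measurements in case B than in case A.
    print(information_difference(omega_a=100, omega_b=10))
```

With ΩA > ΩB the result is negative under this reading, which is consistent with describing information as negentropy. Note that kij only scales the result, so setting it to 1 here is purely for convenience.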

Before closing out this section, an explanation of the entropy relationship is necessary. When we wrote equation (i), the logarithmic function was due to the additive nature of entropy, which itself is due to the assumption of no interaction between the internal structures of the molecules. The conventional entropy S is defined for a thermodynamic system, which in essence represents a macroscopic observer ObsM with capacity v²/c² ≪ 1. The assumption of the lack of interaction corresponds to the absence of information about <t = 0j>, the initial state. Higher observer capacity, or the condition ΔS < 0, represents awareness of the initial state. The first step towards this awareness will be the phenomenological explanation of the fine-structure constant. Phenomenological explanations require observer awareness of the initial state, <t = 0j>.
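The link between the logarithm and additivity can be made explicit with a short worked step. This is a sketch that assumes equation (i) has the form S = kij ln Ω and that two non-interacting subsystems, labeled 1 and 2 here purely for illustration, contribute independent counts Ω1 and Ω2, so that the combined count is their product.

```latex
\Omega = \Omega_1 \Omega_2
\quad\Longrightarrow\quad
S = k_{ij} \ln(\Omega_1 \Omega_2)
  = k_{ij} \ln \Omega_1 + k_{ij} \ln \Omega_2
  = S_1 + S_2
```

The product rule for Ω holds only when the subsystems do not interact, which is why the additive entropy rests on the no-interaction assumption described above.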

Well, writing equations is good; everybody does it, and so did we. But then what is the connection to nature? We shall discuss it next when we describe the fine-structure constant, Alpha.

______________

1. At this point we will state a basic principle without proof: "Every observer moves towards the state with maximum information, the observer can measure."

Information on www.ijspace.org is licensed under a Creative Commons Attribution 4.0 International License.


"Entropy measures the lack of information about the exact state of a system. - Brillouin" - Zemansky and Dittman, Heat and Thermodynamics, The McGraw-Hill Corporation. "This principle (the negentropy principle of information), imposes a new limitation on physical experiments and is independent of the well-known uncertainty relations of the quantum mechanics." - Leon Brillouin in Science and Information Theory. "His final view, expressed in 1912, seems to be that the interaction between ether and resonators is continuous, whereas the energy exchange between ordinary matter, such as atoms, and resonators is somehow quantized." - Hendrik Antoon Lorentz’s struggle with quantum theory, A. J. Kox, Arch. Hist. Exact Sci. (2013) 67:149–170. "If the world has begun with a single quantum, the notions of space and time would altogether fail to have any meaning at the beginning; they would only begin to have a sensible meaning when the original quantum had been divided into a sufficient number of quanta. If this suggestion is correct, the beginning of the world happened a little before the beginning of space and time." - G. LEMAÎTRE, "The Beginning of the World from the Point of View of Quantum Theory.", Nature 127, 706 (9 May 1931). |